Logistic regression is a statistical technique for modeling the association between a categorical dependent (response) variable and one or more categorical or continuous independent (explanatory) variables.

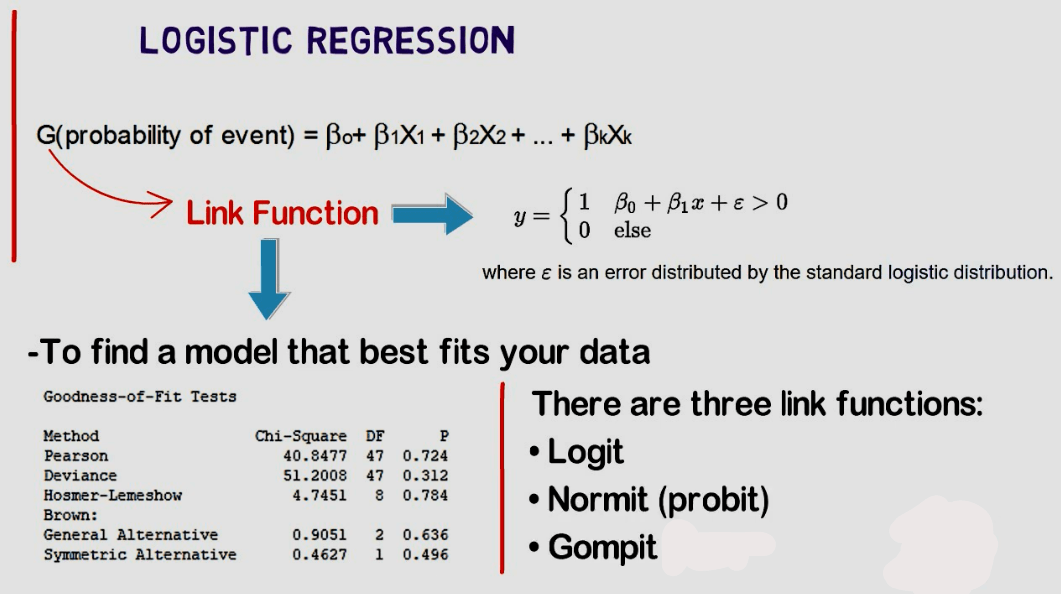

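Writing $p$ for the probability of the event, the regression model can be defined as

$$G(p) = \beta_0 + \beta_1 x_1 + \beta_2 x_2 + \dots + \beta_k x_k$$

where $G$ is the link function. For a binary response, the model can also be motivated by an unobserved (latent) linear predictor plus a random error term $\epsilon$: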

$$Y = \begin{cases} 1, & \beta_0 + \beta_1 x_1 + \dots + \beta_k x_k + \epsilon > 0 \\ 0, & \text{otherwise} \end{cases}$$
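The latent-variable form can be connected to the logit link with a short simulation. This is a minimal sketch using only the Python standard library. It assumes that $\epsilon$ follows a standard logistic distribution, and it uses a single predictor with hypothetical coefficients $\beta_0 = -1$ and $\beta_1 = 0.5$. Under that assumption, the share of simulated cases with $Y = 1$ should approach the inverse logit of $\beta_0 + \beta_1 x_1$.

```python
import math
import random


def inv_logit(eta: float) -> float:
    """Inverse of the logit link: maps a linear predictor to a probability."""
    return 1.0 / (1.0 + math.exp(-eta))


def draw_logistic(rng: random.Random) -> float:
    """Draw one standard logistic error term by inverting its CDF."""
    u = rng.random()
    while u == 0.0:  # rng.random() can return 0.0; avoid log(0)
        u = rng.random()
    return math.log(u / (1.0 - u))


def simulate_share_of_ones(beta0: float, beta1: float, x1: float,
                           n: int, seed: int = 0) -> float:
    """Fraction of n latent draws where beta0 + beta1*x1 + eps > 0, i.e. Y = 1."""
    rng = random.Random(seed)
    eta = beta0 + beta1 * x1
    ones = sum(1 for _ in range(n) if eta + draw_logistic(rng) > 0)
    return ones / n


if __name__ == "__main__":
    # Hypothetical coefficients, chosen only for illustration.
    beta0, beta1, x1 = -1.0, 0.5, 4.0
    print("simulated P(Y=1):", simulate_share_of_ones(beta0, beta1, x1, n=100_000))
    print("inverse logit:   ", inv_logit(beta0 + beta1 * x1))
```

If the logistic draw is replaced with a standard normal draw (for example `rng.gauss(0, 1)`), the simulated share matches the probit link instead. This is why the assumed distribution of the error term determines the link.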

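The assumed distribution of $\epsilon$ determines the link function. Three link functions are commonly used, written here in terms of the event probability $p$:

- Logit: $G(p) = \log\frac{p}{1-p}$, the log odds. It corresponds to a logistic distribution for $\epsilon$.

- Normit (probit): $G(p) = \Phi^{-1}(p)$, where $\Phi$ is the standard normal CDF. It corresponds to a normal distribution for $\epsilon$.

- Gompit (complementary log-log): $G(p) = \log(-\log(1-p))$. It corresponds to an extreme-value (Gumbel) distribution for $\epsilon$.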
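The three links and their inverses can be written directly from the formulas above. The following is a minimal Python sketch using only the standard library, where `statistics.NormalDist` supplies the standard normal CDF and its inverse. The function names are illustrative choices and do not come from any particular statistics package.

```python
import math
from statistics import NormalDist

_STD_NORMAL = NormalDist()  # standard normal: mean 0, standard deviation 1


# Links: map a probability p in (0, 1) to the linear-predictor scale.
def logit(p: float) -> float:
    return math.log(p / (1.0 - p))


def probit(p: float) -> float:  # also called "normit"
    return _STD_NORMAL.inv_cdf(p)


def gompit(p: float) -> float:  # complementary log-log
    return math.log(-math.log(1.0 - p))


# Inverse links: map a linear predictor eta back to a probability.
def inv_logit(eta: float) -> float:
    return 1.0 / (1.0 + math.exp(-eta))


def inv_probit(eta: float) -> float:
    return _STD_NORMAL.cdf(eta)


def inv_gompit(eta: float) -> float:
    return 1.0 - math.exp(-math.exp(eta))


if __name__ == "__main__":
    for p in (0.1, 0.5, 0.9):
        print(f"p={p}: logit={logit(p):.3f}  probit={probit(p):.3f}  gompit={gompit(p):.3f}")
```

At $p = 0.5$, the logit and probit both equal 0, while the gompit equals $\log(\log 2) \approx -0.367$. Unlike the other two, the complementary log-log link is not symmetric about $p = 0.5$.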

Why use logistic regression?

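We use it when there exists:

- one categorical response variable,

- one or more explanatory variables, and

- no linear relationship between the dependent and independent variables. Logistic regression instead assumes a linear relationship between the independent variables and the log odds of the response.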

Assumptions of Logistic Regression

- The dependent variable should be categorical (binary, ordinal, or nominal). A count response is handled by the related Poisson regression instead.

- The predictors (independent variables) can be continuous or categorical.

- The correlation among the predictors (multicollinearity) should not be severe.

- Each continuous predictor should be linearly related to the log odds of the outcome.

- The data should be representative of the population and should be recorded in the order it was collected.

- The model should provide a good fit to the data.
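Analysts often judge whether multicollinearity is severe using the variance inflation factor (VIF). The minimal Python sketch below covers only the two-predictor case, where the VIF reduces to $1/(1 - r^2)$ and $r$ is the Pearson correlation between the two predictors. The data are hypothetical. With more predictors, each VIF instead comes from regressing one predictor on all the others. Commonly quoted rules of thumb treat VIFs above roughly 5 or 10 as a concern, but these thresholds vary.

```python
import math
from typing import Sequence


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation coefficient between two equal-length samples."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def vif_two_predictors(x1: Sequence[float], x2: Sequence[float]) -> float:
    """VIF shared by both predictors when the model has exactly two."""
    r = pearson_r(x1, x2)
    return 1.0 / (1.0 - r * r)  # division fails if |r| == 1 (perfect collinearity)


if __name__ == "__main__":
    # Hypothetical predictors: x2_close is nearly 2 * x1, x2_unrelated is not.
    x1 = [1.0, 2.0, 3.0, 4.0]
    x2_close = [2.1, 3.9, 6.2, 7.8]
    x2_unrelated = [1.0, -1.0, -1.0, 1.0]
    print("VIF (strongly correlated):", vif_two_predictors(x1, x2_close))
    print("VIF (uncorrelated):       ", vif_two_predictors(x1, x2_unrelated))
```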

Logistic regression vs Linear regression

- In linear regression, the outcome is continuous, while in logistic regression, the outcome is discrete (categorical).

- Linear regression requires a linear relationship between the dependent and independent variables. Logit does not. Instead, it requires that the independent variables be linearly related to the log odds of the outcome.

- Linear regression fits a straight line (or plane) to the data, while Logit fits an S-shaped (sigmoid) curve to the probability of the outcome.

- In machine learning, linear regression is used as a regression algorithm, while Logit is used as a classification algorithm.

- Linear regression assumes that the dependent variable is normally (Gaussian) distributed around the fitted line. Logit assumes that the dependent variable follows a binomial distribution.

*Logit = logistic regression
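The contrast between fitting a straight line and fitting a curve to a discrete outcome is easy to see numerically. The Python sketch below fits both models to a small hypothetical pass/fail data set. The linear model is a least-squares straight line. The logistic model is fitted by maximum likelihood using Newton-Raphson iterations, which is one standard fitting method (assumed here; statistical packages may use other solvers). Only the standard library is used.

```python
import math
from typing import Sequence, Tuple


def fit_linear(x: Sequence[float], y: Sequence[float]) -> Tuple[float, float]:
    """Ordinary least-squares intercept and slope."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    slope = sxy / sxx
    return my - slope * mx, slope


def fit_logistic(x: Sequence[float], y: Sequence[float],
                 iters: int = 25) -> Tuple[float, float]:
    """Maximum-likelihood intercept and slope via Newton-Raphson.

    Assumes the two classes overlap; with perfectly separable data the
    estimates diverge instead of converging.
    """
    b0 = b1 = 0.0
    for _ in range(iters):
        g0 = g1 = h00 = h01 = h11 = 0.0
        for xi, yi in zip(x, y):
            p = 1.0 / (1.0 + math.exp(-(b0 + b1 * xi)))
            w = p * (1.0 - p)
            g0 += yi - p          # gradient of the log-likelihood
            g1 += (yi - p) * xi
            h00 += w              # information matrix (negative Hessian)
            h01 += w * xi
            h11 += w * xi * xi
        det = h00 * h11 - h01 * h01
        # Newton step: beta <- beta + I^{-1} g, with the 2x2 inverse written out
        b0 += (h11 * g0 - h01 * g1) / det
        b1 += (h00 * g1 - h01 * g0) / det
    return b0, b1


if __name__ == "__main__":
    # Hypothetical data: hours studied (x) and fail/pass outcome (y).
    hours = [1, 2, 3, 4, 5, 6, 7, 8]
    passed = [0, 0, 0, 1, 0, 1, 1, 1]
    a0, a1 = fit_linear(hours, passed)
    b0, b1 = fit_logistic(hours, passed)
    for h in (0, 4.5, 10):
        line = a0 + a1 * h
        curve = 1.0 / (1.0 + math.exp(-(b0 + b1 * h)))
        print(f"x={h:>4}: straight line {line:6.3f}   logistic curve {curve:.3f}")
```

On this toy data, the straight line predicts −0.25 at x = 0 and about 1.42 at x = 10, values that cannot be probabilities. The logistic curve stays between 0 and 1. Because the data set is symmetric about x = 4.5, both fits give 0.5 at that point.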

Types

There are three types of logistic regression, plus the closely related Poisson regression for counts. They are,

- Binary logistic: When the dependent variable has two categories, such as yes or no, pass or fail, or high or low, the regression is called binary logistic regression.

- Ordinal logistic: When the dependent variable has three or more categories with a natural ordering, such as survey responses (disagree, neutral, agree), the regression is called ordinal logistic regression.

- Nominal logistic: When the dependent variable has three or more categories with no natural ordering, such as colors (red, blue, green), the regression is called nominal (or multinomial) logistic regression.

- Poisson regression: When the dependent variable is the number of times an event occurs (0, 1, 2, 3, …), the model is called Poisson regression. It uses a log link rather than a logit link, so strictly speaking it is a related generalized linear model rather than a type of logistic regression.
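The ordinal and nominal models differ mainly in how category probabilities are built from linear predictors. The Python sketch below assumes the common cumulative-logit (proportional odds) form for ordinal data and the baseline-category logit form for nominal data. Both use a single predictor x with hypothetical coefficients. Sign conventions for the ordinal cutpoints differ between software packages.

```python
import math
from typing import List, Sequence


def sigmoid(eta: float) -> float:
    return 1.0 / (1.0 + math.exp(-eta))


def ordinal_probs(x: float, cutpoints: Sequence[float], beta: float) -> List[float]:
    """Cumulative logit: P(Y <= j) = sigmoid(cut_j - beta * x).

    Cutpoints must be increasing; returns one probability per ordered category.
    """
    cumulative = [sigmoid(c - beta * x) for c in cutpoints] + [1.0]
    probs, prev = [], 0.0
    for c in cumulative:
        probs.append(c - prev)  # P(Y = j) = P(Y <= j) - P(Y <= j - 1)
        prev = c
    return probs


def nominal_probs(x: float, intercepts: Sequence[float],
                  slopes: Sequence[float]) -> List[float]:
    """Baseline-category logit: the first category is the reference (eta = 0)."""
    etas = [0.0] + [a + b * x for a, b in zip(intercepts, slopes)]
    m = max(etas)  # subtract the max before exp for numerical stability
    exps = [math.exp(e - m) for e in etas]
    total = sum(exps)
    return [e / total for e in exps]


if __name__ == "__main__":
    # Hypothetical survey model: categories (disagree, neutral, agree).
    for x in (0.0, 2.0):
        print("ordinal", x, [round(p, 3) for p in ordinal_probs(x, [-1.0, 1.0], 0.8)])
    # Hypothetical color model: red is the reference; blue and green get coefficients.
    print("nominal", [round(p, 3) for p in nominal_probs(1.5, [0.2, -0.4], [0.5, 1.1])])
```

With a positive slope in this parameterization, larger x shifts probability toward the higher ordered categories. The nominal model has no such ordering, so each non-reference category gets its own intercept and slope.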

Advantages

- Logistic regression doesn’t require a linear relationship between the dependent and independent variables.

- The residuals don’t need to be normally distributed.

- It is easy to implement and interpret, and very efficient to train.

- It makes no assumptions about the distributions of classes in feature space.

- It extends easily to multiple classes (multinomial regression) and gives a natural probabilistic view of class predictions.

- It provides not only a measure of how relevant a predictor is (coefficient size) but also its direction of association (positive or negative).

- It is very fast at classifying new, unseen records.

- It achieves good accuracy on many simple data sets and performs well when the classes are (approximately) linearly separable.

- Model coefficients can be interpreted as indicators of feature importance, most meaningfully when the predictors are on comparable scales.

- It is less prone to overfitting, but it can overfit high-dimensional data sets. Regularization (L1 or L2) can help avoid overfitting in these cases.
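The coefficient interpretation above is usually stated on the odds scale. In a model with a logit link, increasing $x_1$ by one unit (with the other predictors held fixed) multiplies the odds of the event by $e^{\beta_1}$. A positive $\beta_1$ gives an odds ratio above 1, and a negative $\beta_1$ gives one below 1. The short Python check below uses hypothetical coefficients to confirm that this ratio is the same at every value of x.

```python
import math


def prob(beta0: float, beta1: float, x: float) -> float:
    """P(event) under a one-predictor logit model."""
    return 1.0 / (1.0 + math.exp(-(beta0 + beta1 * x)))


def odds(p: float) -> float:
    return p / (1.0 - p)


def odds_ratio(beta1: float) -> float:
    """Multiplicative change in the odds per one-unit increase in x."""
    return math.exp(beta1)


if __name__ == "__main__":
    beta0, beta1 = -2.0, 0.7  # hypothetical coefficients
    for x in (0.0, 3.0):
        ratio = odds(prob(beta0, beta1, x + 1)) / odds(prob(beta0, beta1, x))
        print(f"x={x}: odds ratio {ratio:.4f} vs exp(beta1) {odds_ratio(beta1):.4f}")
```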

Disadvantages

- If the number of observations is smaller than the number of features, logistic regression should not be used (at least not without regularization), because it is likely to overfit.

- It constructs linear decision boundaries.

- The major limitation is the assumption of linearity between the independent variables and the log odds of the dependent variable.

- It can only be used to predict discrete outcomes, so the dependent variable must take values in a discrete set.

- Non-linear problems can’t be solved directly with logistic regression, because its decision surface is linear in the original features. Linearly separable data is rarely found in real-world scenarios.

- It requires little or no multicollinearity among the independent variables.

- It is hard to capture complex relationships with logistic regression. More flexible algorithms, such as neural networks, can often outperform it.

- In linear regression, the independent and dependent variables are related linearly. Logistic regression instead requires the independent variables to be linearly related to the log odds, $\log(p/(1-p))$.
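Two of the points above can be checked directly. With a 0.5 threshold, the model predicts the event exactly when the linear predictor $\beta_0 + \beta_1 x_1 + \beta_2 x_2$ is positive. The decision boundary $\beta_0 + \beta_1 x_1 + \beta_2 x_2 = 0$ is therefore a straight line, and the log odds $\log(p/(1-p))$ recover the linear predictor. The Python sketch below uses two predictors and hypothetical coefficients. The 0.5 threshold is an assumption; other cut-offs shift the line but keep it straight.

```python
import math


def linear_predictor(b0: float, b1: float, b2: float, x1: float, x2: float) -> float:
    return b0 + b1 * x1 + b2 * x2


def prob(b0: float, b1: float, b2: float, x1: float, x2: float) -> float:
    """P(event) under a two-predictor logit model."""
    return 1.0 / (1.0 + math.exp(-linear_predictor(b0, b1, b2, x1, x2)))


def log_odds(p: float) -> float:
    return math.log(p / (1.0 - p))


def boundary_x2(b0: float, b1: float, b2: float, x1: float) -> float:
    """x2 on the p = 0.5 boundary b0 + b1*x1 + b2*x2 = 0 (requires b2 != 0)."""
    return -(b0 + b1 * x1) / b2


if __name__ == "__main__":
    b0, b1, b2 = 1.0, -2.0, 0.5  # hypothetical coefficients
    for x1 in (-1.0, 0.0, 1.0, 2.0):
        x2 = boundary_x2(b0, b1, b2, x1)
        print(f"boundary point ({x1}, {x2}): p = {prob(b0, b1, b2, x1, x2):.3f}")
```

Every boundary point has p = 0.5, and consecutive points lie on a line with slope $-\beta_1/\beta_2$. A class pattern that needs a curved boundary in $(x_1, x_2)$ cannot be reproduced with these two features alone.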

Applications

According to Wikipedia, some important applications are as follows.

Logistic regression is used in various fields, including machine learning, most medical fields, and the social sciences. For example, the Trauma and Injury Severity Score (TRISS), which is widely used to predict mortality in injured patients, was originally developed by Boyd et al. using logistic regression. Many other medical scales used to assess the severity of a patient’s condition have been developed using logistic regression. Logistic regression may be used to predict the risk of developing a given disease (e.g. diabetes or coronary heart disease) based on observed characteristics of the patient (age, sex, body mass index, results of various blood tests, etc.).

Another example might be to predict whether a Nepalese voter will vote for the Nepali Congress, the Communist Party of Nepal, or any other party, based on age, income, sex, race, state of residence, votes in previous elections, etc. The technique can also be used in engineering, especially for predicting the probability of failure of a given process, system, or product. It is also used in marketing applications, such as predicting a customer’s propensity to purchase a product or cancel a subscription. In economics, it can be used to predict the likelihood of a person choosing to be in the labor force, and a business application would be to predict the likelihood of a homeowner defaulting on a mortgage. Conditional random fields, an extension of logistic regression to sequential data, are used in natural language processing.
